How much do we have to know to predict? Do not mistake understanding for prediction! The illusion of validity

“Meehl’s results strongly suggest that any satisfaction you felt with the quality of your judgment was an illusion: the illusion of validity.” Cass R. Sunstein, Daniel Kahneman, and Olivier Sibony

In short: not much! Here is my rationale, with all the nuances of the issue. Stick around before forming your opinion! Better yet, form your opinion first, compare it with my thinking patterns, and let me know your thoughts!

I am going to concentrate on mathematical models, and my thoughts are strongly directed at problems in medicine. In case you are trying to guess, I am an applied mathematician/engineer/biomathematician/whatever has to do with mathematical models in the life sciences.

It is widely known, and no surprise, that artificial neural networks (NNs) have trouble predicting. That is, after you have trained your model with all the care in the world, you can use it to make predictions, but it should come as no surprise if something goes wrong; this is not news to data scientists and people in related fields. Most modellers who suggest that you use NNs repeat this warning, and you may receive similar advice for other models. As far as I know, the only group of models that comes with information about the reliability of their predictions is linear regression, which is quite limited in its applications! You can plot a lower and an upper bound around your regression model: not surprisingly, the band gets broader as you go further into the future. Daniel Kahneman called this objective ignorance, if I may make this adaptation.

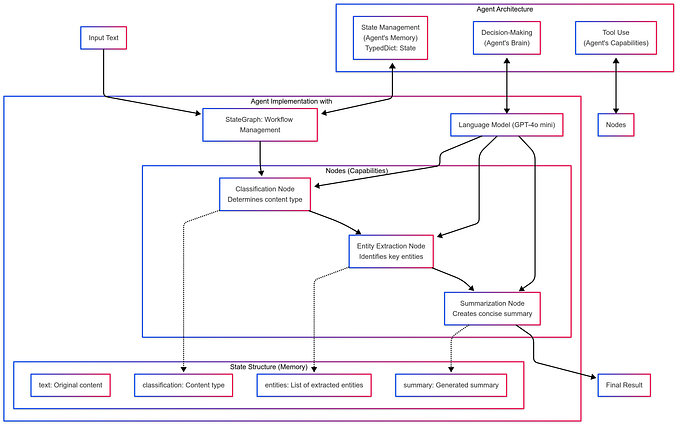
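To make the widening band concrete, here is a minimal sketch of a prediction band for a simple linear regression. Everything in it is an assumption made for illustration: the data are synthetic, the function name `prediction_band` is hypothetical, and the multiplier `k = 2.0` is only a rough stand-in for the Student-t quantile that an exact 95% band would use.

```python
import numpy as np

def prediction_band(x, y, x_new, k=2.0):
    """Fit y = a + b*x by least squares and return (fit, lower, upper) at x_new.

    k is an approximate multiplier (about 2 for a roughly 95% band);
    an exact band would use a Student-t quantile instead.
    """
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    x_new = np.asarray(x_new, dtype=float)
    n = x.size
    x_bar = x.mean()
    sxx = np.sum((x - x_bar) ** 2)
    slope = np.sum((x - x_bar) * (y - y.mean())) / sxx
    intercept = y.mean() - slope * x_bar
    residuals = y - (intercept + slope * x)
    s = np.sqrt(np.sum(residuals ** 2) / (n - 2))  # residual standard error
    # Standard error of a new observation: grows with distance from x_bar.
    se_pred = s * np.sqrt(1.0 + 1.0 / n + (x_new - x_bar) ** 2 / sxx)
    fit = intercept + slope * x_new
    return fit, fit - k * se_pred, fit + k * se_pred

# Synthetic "past" observations (assumed, not real data).
rng = np.random.default_rng(0)
x_obs = np.arange(20.0)
y_obs = 1.5 * x_obs + 3.0 + rng.normal(scale=2.0, size=x_obs.size)

# Points further and further into the "future".
x_future = np.array([20.0, 30.0, 40.0, 60.0])
fit, lower, upper = prediction_band(x_obs, y_obs, x_future)
widths = upper - lower
for xf, w in zip(x_future, widths):
    print(f"x = {xf:5.1f}  band width = {w:.2f}")
```

The term `(x_new - x_bar) ** 2 / sxx` is what makes the band grow: the further the new point is from the centre of the observed data, the wider the gap between the lower and upper bounds.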

When Daniel Kahneman presents his thoughts, I guess he is talking about what I like to call “black box models”: you have no idea what is going on inside, but you know it works. That is how NNs work! For a while, people were reluctant to use them because of this lack of explainability; you have no idea how often I heard the argument that “we do not use NNs since you cannot explain how they work”. I have the feeling that Google somehow changed everything. I call them, and similar models, “blind models”.

Complexity vs. simplicity

I have a short discussion about that on my channel.

What surprised me the most about the insights from Daniel Kahneman is that even randomly generated simple models were able to beat humans: our complex thoughts seem to backfire! I would never have expected that. To be honest, now that I think about it more, most of the successful people I know are “simple minded”, no offense intended; I mean that they do not know much. Maybe knowing too much is bad for the decision process, when it comes to humans. See how we struggle in this age of information, wherein you can know anything at any time, and still we are as stupid as before, or even more so. Curiously, Michel Desmurget published a book on this topic; I have not read it yet, but it seems interesting!

“In one of the three samples, 77% of the ten thousand randomly weighted linear models did better than the human experts. In the other two samples, 100% of the random models outperformed the humans.” Cass R. Sunstein, Daniel Kahneman, and Olivier Sibony
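To get a feeling for how a randomly weighted linear model could beat a judge, here is a toy simulation. It does not reproduce the study quoted above and is not evidence for it: the data are synthetic, and the "judge" is my own assumption, namely someone who uses sensible weights on average but applies them inconsistently from case to case (modelled as added noise). The names `w_true` and `judge_noise_sd`, and the choice of non-negative random weights, are all hypothetical.

```python
import numpy as np

rng = np.random.default_rng(42)
n_cases, n_predictors, n_models = 500, 5, 10_000

# Synthetic cases: standardized predictors and an outcome that depends on them.
X = rng.normal(size=(n_cases, n_predictors))
w_true = np.array([0.5, 0.4, 0.3, 0.2, 0.1])  # hypothetical "true" weights
outcome = X @ w_true + rng.normal(scale=1.0, size=n_cases)

def corr_with_outcome(y, preds):
    """Pearson correlation between y and each column of preds."""
    yc = y - y.mean()
    pc = preds - preds.mean(axis=0)
    return (yc @ pc) / (np.linalg.norm(yc) * np.linalg.norm(pc, axis=0))

# Simulated judge: right weights on average, but inconsistent between cases.
judge_noise_sd = 1.0  # hypothetical level of inconsistency
judgment = X @ w_true + rng.normal(scale=judge_noise_sd, size=n_cases)
judge_corr = corr_with_outcome(outcome, judgment[:, None])[0]

# Random linear models: weights drawn once, then applied identically to every case.
random_weights = rng.uniform(0.0, 1.0, size=(n_predictors, n_models))
model_corrs = corr_with_outcome(outcome, X @ random_weights)

fraction_better = float(np.mean(model_corrs > judge_corr))
print(f"simulated judge correlation: {judge_corr:.3f}")
print(f"random models beating the judge: {fraction_better:.1%}")
```

The fraction printed depends entirely on the assumed `judge_noise_sd`: try lowering it toward zero and raising it to see how consistency alone changes who wins.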

What does that mean for us as mathematical biologists? I see it as good news! It means, as I see it, that there is strong evidence that models can replace humans in several decision processes, and even do better in some situations, so our models can be handy for biomedical problems. In some scenarios, they found that the model was only slightly better than the human. Still, models are faster, cheaper, and less prone to “mood swings”. I know that we hate the tech guys, as Marshall Rosenberg noted, but if we have something better, it is only a matter of time until it is used; and yes, I know about the problems with models nowadays, such as racism in Facebook/Instagram algorithms. I am not saying we should replace humans in every single aspect of decision making, but only in certain scenarios where humans may not be the best option.

Regarding models in the decision process for biomedical problems, see here some nice discussions with Prof. Ganna.

We still have several strange ways of competing with computers. For example, we want to have a good memory as a sign of intelligence, but it is not! Computers have good memory, and that is their job. It has been shown that we tamper with our memories, our stories, and so on! It seems we do the same with decisions: we decide differently depending on external factors that we are not always aware of (a “different weight” for slightly different versions of the same problem). You may decide differently according to some of your core values, in scenarios where objectivity may be the best option!

“In what artificial intelligence and robotics experts call Moravec’s paradox, in chess, as in so many things, what machines are good at is where humans are weak, and vice versa.” [highlight added] Deep Thinking, Garry Kasparov

“we are in a strange competition both with and against our own creations [intelligent machines] in more ways every day.” Deep Thinking: Where Machine Intelligence Ends and Human Creativity Begins

How to cite?

Pires, J. G. (2021, December 26). How much do we have to know to predict? do not mistake understanding with prediction! The illusion of validity. Published in Data Driven Investor on Medium. https://medium.datadriveninvestor.com/how-much-do-we-have-to-know-to-predict-1214ef2a9fa1

Change the citation format: https://www.scribbr.com/apa-citation-generator/